In this post, I’ll go over the technical decisions that went into making a self-driving car out of LEGO and an iPhone 13 Pro.

What?

One boring winter day, I decided that my coding skills were getting rusty and that I needed a new side project. By chance, I stumbled upon one of the LEGO Technic cars that has a Bluetooth interface, and it all clicked together: I was going to build a self-driving car out of LEGO and an iPhone! (Easy, right?)

LEGO 4X4 X-treme Off-Roader (42099): this is how it looked before I massacred it

Why iPhone?

The car needs a way to “see” its surroundings, ideally in 3D. There are multiple ways to do that; for example, stereo cameras are a common solution.

However, it turns out that newer Pro iPhones have a built-in LIDAR in addition to their cameras. I was lucky to have gotten an iPhone 13 Pro a few months earlier, so there was no need to buy any extra hardware.

iPhone 13 Pro camera block

The iPhone’s LIDAR outputs a semi-dense point cloud with 49,152 points per frame (one point per pixel of a 192x256 depth map), which is more than enough for this use case.
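For reference, one common way to turn a depth map into a point cloud like this is pinhole back-projection: a pixel (u, v) with depth z maps to x = (u - cx) * z / fx and y = (v - cy) * z / fy. The sketch below is not necessarily how this project produced its point cloud. It assumes a depth map already converted to meters, laid out as 192 rows by 256 columns, and uses made-up intrinsics (fx, fy, cx, cy); real values would come from the device's camera calibration.

```python
import numpy as np

def depth_to_points(depth_m: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project an HxW depth map (meters) into an (H*W, 3) point cloud with a pinhole model."""
    h, w = depth_m.shape
    # Pixel coordinate grids, each of shape (h, w): u indexes columns, v indexes rows.
    u, v = np.meshgrid(np.arange(w, dtype=np.float32), np.arange(h, dtype=np.float32))
    z = depth_m.astype(np.float32)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    # One (x, y, z) point per pixel, in row-major pixel order.
    return np.stack([x, y, z], axis=-1).reshape(-1, 3)

# Hypothetical intrinsics, for illustration only.
depth = np.full((192, 256), 2.0, dtype=np.float32)  # a flat wall 2 m in front of the camera
points = depth_to_points(depth, fx=210.0, fy=210.0, cx=128.0, cy=96.0)
print(points.shape)  # (49152, 3)
```

Every pixel becomes one point, which is where the 49,152 figure comes from; pixels with missing or low-confidence depth would typically be filtered out before use.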

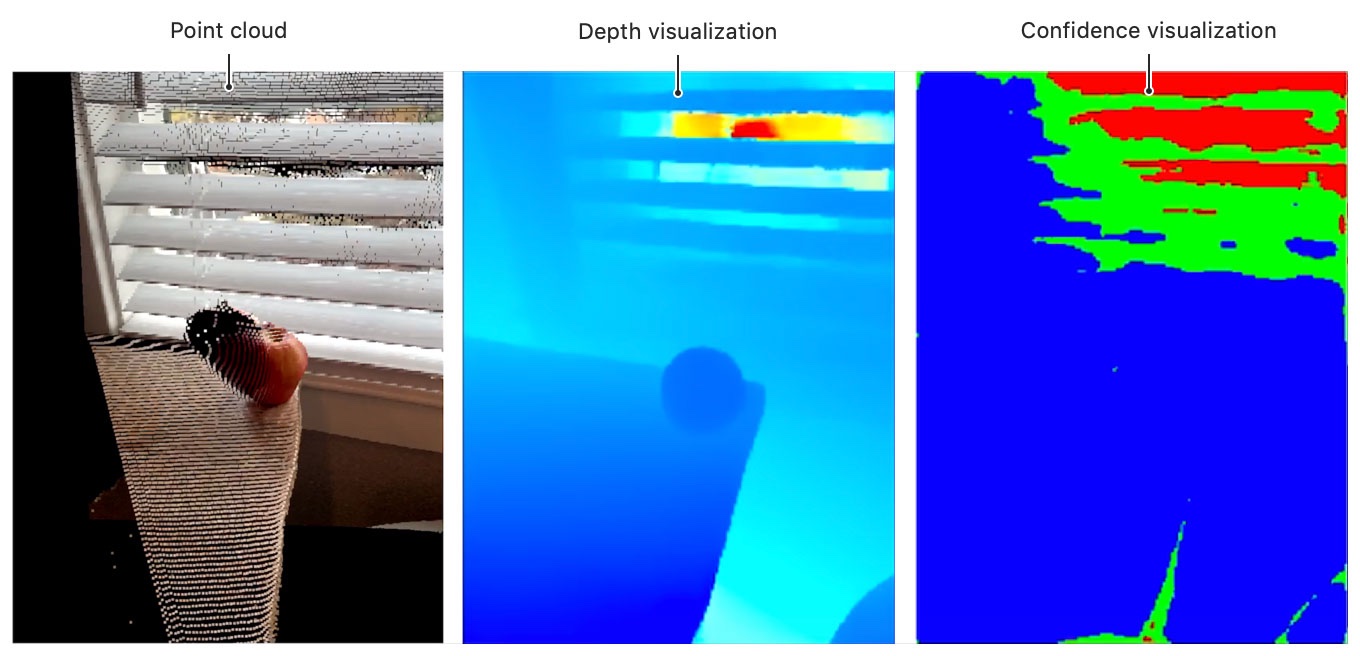

Example of a semi-dense 3D point cloud

Breakfast with friends through the 'lens' of a LIDAR

Where will the “car brains” be?

This is a tricky one. There are basically two options:

- Run everything on the iPhone itself

- Run everything on a computer and use the iPhone only as a sensor

I picked the latter because it allows me to move much faster, as I don’t have much experience with iOS development. Furthermore, if I ever want to switch from an iPhone + MacBook to a cheaper camera + Raspberry Pi setup, only a small code change will be required.

Overview of the system

That said, I know of at least one example where a similar car was built to run entirely on an iPhone.

As for the computer, I use a base M1 MacBook Air (2020). It is a surprisingly powerful machine built around a novel ARM chip, all without an active cooling system!

What’s the architecture like?

My first intention was to use the Robot Operating System (ROS), the de facto standard set of libraries for robotics. Unfortunately, it does not fully support the new M1 CPUs. I tried running it on Ubuntu in Docker, which works, but Docker on macOS is not very robust, and the network performance I got was much worse than on the host system.

This led me to build my own set of libraries that mimic the parts of ROS I wanted to use. Specifically, they provide a distributed architecture where each node runs independently and nodes communicate via message passing. For example, the Bluetooth node does not care who sends the commands, so you can run separate nodes for automatic and manual control in case you want to intervene (too late, the car is already in the wall).
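To make the message-passing idea concrete, here is a minimal in-process sketch using only asyncio. It is neither the author's library nor RabbitMQ: the `Bus` class, the `"commands"` topic, and the message fields are hypothetical. It only illustrates the decoupling described above, where a Bluetooth node consumes commands from a topic without knowing whether the autopilot or a human produced them.

```python
import asyncio
from collections import defaultdict

class Bus:
    """In-process stand-in for a message broker: each topic fans out to subscriber queues."""

    def __init__(self) -> None:
        self._subscribers = defaultdict(list)  # topic name -> list of asyncio.Queue

    def subscribe(self, topic: str) -> asyncio.Queue:
        queue: asyncio.Queue = asyncio.Queue()
        self._subscribers[topic].append(queue)
        return queue

    async def publish(self, topic: str, message) -> None:
        # Every subscriber queue receives the message; publishers never see who is listening.
        for queue in self._subscribers[topic]:
            await queue.put(message)

async def bluetooth_node(commands: asyncio.Queue, received: list, n: int) -> None:
    # Hypothetical node: consumes n commands regardless of which node sent them.
    for _ in range(n):
        received.append(await commands.get())

async def main() -> list:
    bus = Bus()
    commands = bus.subscribe("commands")
    received: list = []
    consumer = asyncio.create_task(bluetooth_node(commands, received, 2))
    await bus.publish("commands", {"source": "autopilot", "speed": 0.5, "steering": 0.0})
    await bus.publish("commands", {"source": "manual", "speed": 0.0, "steering": 0.0})  # human override
    await consumer
    return received

if __name__ == "__main__":
    print(asyncio.run(main()))
```

In a setup like the one described here, a broker such as RabbitMQ takes the place of `Bus`, which lets nodes run as separate processes while the node code stays focused on its own topics.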

Data flow between nodes

After a few days of Python and asyncio coding, I had a simple abstraction on top of RabbitMQ. It lets nodes publish and subscribe to messages, which are mostly 2D arrays.
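Since most messages are 2D arrays, they have to be turned into bytes before going through a broker. One simple option, assumed here rather than taken from the project, is NumPy's `.npy` format, which stores the shape and dtype alongside the raw data. `encode_array` and `decode_array` are hypothetical helper names.

```python
import io

import numpy as np

def encode_array(arr: np.ndarray) -> bytes:
    """Serialize an array (data plus shape and dtype header) into bytes in .npy format."""
    buf = io.BytesIO()
    np.save(buf, arr, allow_pickle=False)
    return buf.getvalue()

def decode_array(payload: bytes) -> np.ndarray:
    """Inverse of encode_array: rebuild the array from a message body."""
    return np.load(io.BytesIO(payload), allow_pickle=False)

rng = np.random.default_rng(0)
depth = rng.integers(0, 500, size=(192, 256), dtype=np.uint16)  # fake depth map in centimeters
body = encode_array(depth)  # bytes suitable for a message body
restored = decode_array(body)
assert restored.dtype == depth.dtype and np.array_equal(restored, depth)
```

The resulting `bytes` can serve as the body of a RabbitMQ message with whichever client library the nodes use. Passing `allow_pickle=False` means the receiver never unpickles arbitrary objects coming off the network.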

The iPhone and the computer communicate over Wi-Fi. A simple HTTP server on the computer expects the iPhone to send data at 30 Hz (30 FPS). Each request is around 150 KB and consists of:

- 480x360 gray-scale camera image

- 192x256 depth map with cm precision

- Gyroscope and accelerometer data
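A quick back-of-the-envelope check on these numbers. The storage types are assumptions (8 bits per grayscale pixel, a 16-bit integer per depth value in centimeters), and the ~150 request size is read as kilobytes. Under those assumptions, the image and depth map alone would be about 271 kB uncompressed, so at least part of each request is presumably compressed. At 30 requests per second, 150 kB per request needs roughly 36 Mbit/s of sustained throughput.

```python
# Back-of-the-envelope payload math; storage types are assumptions, not the app's actual format.
IMAGE_W, IMAGE_H = 480, 360   # grayscale camera image, assumed 1 byte per pixel
DEPTH_W, DEPTH_H = 256, 192   # depth map, assumed uint16 centimeters (2 bytes per value)
FPS = 30
REPORTED_REQUEST_KB = 150     # approximate request size quoted in the text

image_bytes = IMAGE_W * IMAGE_H * 1             # 172,800 bytes
depth_bytes = DEPTH_W * DEPTH_H * 2             # 98,304 bytes
raw_total_kb = (image_bytes + depth_bytes) / 1000

# Sustained throughput for the reported request size at 30 Hz, in megabits per second.
mbit_per_s = REPORTED_REQUEST_KB * 1000 * 8 * FPS / 1e6

print(f"raw image: {image_bytes} B, raw depth: {depth_bytes} B, total ≈ {raw_total_kb:.0f} kB")
print(f"required throughput ≈ {mbit_per_s:.0f} Mbit/s")
```

The gyroscope and accelerometer readings are a few numbers per frame, so they are negligible in this estimate.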

It turns out that 2.4 GHz Wi-Fi is not good enough, but 5 GHz works well and sustains a stable 30 FPS data stream.
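To check that the stream really holds 30 FPS, the receiving server can keep a rolling estimate of the frame arrival rate. `FpsMeter` is a hypothetical helper, not part of the project; in a real server, `tick()` would be called once per received request.

```python
import time
from collections import deque
from typing import Optional

class FpsMeter:
    """Rolling frame-rate estimate over the most recent `window` frame arrivals."""

    def __init__(self, window: int = 30) -> None:
        self._stamps = deque(maxlen=window)  # arrival times, oldest first

    def tick(self, now: Optional[float] = None) -> None:
        # Record one frame arrival; time.monotonic() is unaffected by wall-clock adjustments.
        self._stamps.append(time.monotonic() if now is None else now)

    @property
    def fps(self) -> float:
        # N timestamps span N - 1 frame intervals.
        if len(self._stamps) < 2:
            return 0.0
        span = self._stamps[-1] - self._stamps[0]
        return (len(self._stamps) - 1) / span if span > 0 else 0.0

meter = FpsMeter(window=30)
for i in range(30):
    meter.tick(now=i / 30)  # simulated frames arriving exactly every 1/30 s
print(f"{meter.fps:.1f} FPS")
```

Logging `meter.fps` periodically makes drops in the stream visible as soon as they happen, rather than only as stutter further down the pipeline.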

This also locked in our performance requirement: the whole system should run at 30 frames per second, which means each node must process a frame in under 33 ms.
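The 33 ms figure is simply the frame period: 1000 ms / 30 ≈ 33.3 ms. A minimal way to watch that budget, assuming nodes handle frames in async functions as an asyncio-based setup suggests, is a decorator that times each call and warns when it overruns. `within_budget`, `slow_node`, and `fast_node` are hypothetical names for illustration.

```python
import asyncio
import functools
import time
import warnings

FRAME_BUDGET_S = 1 / 30  # one frame period at 30 FPS, about 33.3 ms

def within_budget(budget_s: float = FRAME_BUDGET_S):
    """Wrap an async per-frame handler and warn if a call takes longer than budget_s seconds."""
    def decorator(handler):
        @functools.wraps(handler)
        async def wrapper(*args, **kwargs):
            start = time.perf_counter()
            result = await handler(*args, **kwargs)
            # Wall-clock time: includes any time spent waiting while other tasks ran on the loop.
            elapsed = time.perf_counter() - start
            if elapsed > budget_s:
                warnings.warn(
                    f"{handler.__name__} took {elapsed * 1000:.1f} ms "
                    f"(budget {budget_s * 1000:.1f} ms)",
                    RuntimeWarning,
                )
            return result
        return wrapper
    return decorator

@within_budget()
async def slow_node(frame):
    await asyncio.sleep(0.05)  # simulate ~50 ms of processing: over budget
    return frame

@within_budget()
async def fast_node(frame):
    return frame  # essentially instant: within budget

if __name__ == "__main__":
    asyncio.run(fast_node("frame"))
    asyncio.run(slow_node("frame"))  # emits a RuntimeWarning
```

Because every node has to meet the same per-frame budget, a slow node shows up in its own warning rather than as a vague drop in overall frame rate.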

What’s next?

In the next part, we will look at the assembled car, play with Bluetooth, crash into a wall (multiple times), and build the first end-to-end autonomous scenario.

The first task will be to drive around randomly while avoiding walls. Like a Roomba!